Abstract

Private nonprofit colleges are increasingly using tuition resets, or a decrease in sticker price by at least 5%, to attract new students and counter declining demand. While discounting tuition with institutional aid is a common practice to get accepted students to matriculate and to increase affordability, a tuition reset is a more transparent approach that moves colleges away from a high aid/high tuition model. The authors find minimal evidence that these policies increase student enrollment in the long run, but that there may be short-term impacts. As expected, institutional aid decreases and varies directly with the size of the sticker price reduction. The average net price students pay decreases, but this effect may be driven by changes in the estimated non-tuition elements of the total cost of attendance. Finally, net tuition revenue appears unrelated to tuition resets. These findings call into question the efficacy of this practice.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

College prices have been increasing at steep rates. The published tuition price at private nonprofit four-year institutions has more than doubled in the past 30 years to an average sticker price of $37,650 in the 2020–2021 academic year (Ma et al., 2020). Although the ultimate price students pay is lower than the sticker price, once subtracting grants, scholarships, and loans, Americans increasingly believe that college is not worth the price (Marken, 2019). The combination of these forces is leading to decreased demand for some private colleges and universities (Krupnick, 2018). For instance, the number of students enrolled across private nonprofit colleges has declined modestly from an all-time high in 2015 (Hussar et al., 2020), and the decline appears to have accelerated during the first two years of the COVID-19 pandemic (National Student Clearinghouse, 2022). These declines are compounded around a growing concern about a potential enrollment cliff due to slowing birth rates and fewer high school graduates in the pipeline to attend college (Grawe, 2018). These declines in enrollment are worrisome as many private colleges are tuition dependent and rely on students to fill seats. Unlike public colleges and universities that have historically relied on state appropriations as their primary revenue source, private colleges rely on student tuition and fees as their main revenue source (Smith, 2019). Private institutions, in particular, are now seeking new enrollment and revenue management strategies to address this declining demand.

In response to decreasing demand, a growing number of private colleges are instituting “tuition reset policies” to attract new students and ostensibly increase demand among students. This practice has increased over time and became an important tool during the pandemic to attract students as demand decreased (Seltzer, 2020). Tuition reset policies simply lower, or reset, the published tuition price by at least five percent in a single given year, though the size of the reset can vary widely (Lapovsky, 2019). Consider the case of Utica College, a small liberal arts college located in the state of New York. In 2016, Utica slashed its sticker price by nearly $14,500 (42%) from the previous year to a published price of $19,996. Utica College is just one of about 63 colleges and universities, and increasing, adopting such a policy across the country in hopes of enhancing enrollment and revenue. This pricing strategy is similar to institutionally funded financial aid, or tuition discounting more generally, which allow institutions to strategically subsidize the cost of attendance for students via grants and scholarships (Breneman et al., 2001; Cheslock, 2006; Hillman, 2012). For example, enrollment managers deploy institutional financial aid as merit-aid or non-need-based aid which allow them to strategically select their incoming class of students by targeting grants toward athletes, economically disadvantaged students, and/or academically successful students (Hillman, 2012). However, tuition resets take an alternative approach to discounting whereby colleges cut published tuition for all potential matriculants instead of allocating aid based on institutional priorities and student needs.

Although deciding which and how many students enroll is important, discounting and reset policies ultimately seek to increase net tuition revenue (i.e., revenue minus aid and other activity costs) (Cheslock, 2006). In a “high aid/high tuition model,” published tuition and fees may be perceived as too high or expensive, however, many students who enroll ultimately benefit from some form of financial aid and thus do not ever pay the advertised cost of attendance (Hearn et al., 1996; Turner, 2018). This high aid/high tuition model allows institutions to benefit from students who would pay this high tuition price, while providing students with limited means or willingness to pay for school an opportunity to enroll by awarding them institutional aid. The high aid/high tuition approach is evidenced by trends over the past two decades in net tuition revenue staying relatively flat among private four-year colleges, despite sticker price increasing at a much higher rate (Ma et al., 2020). This tuition discounting process is more common at private four-year institutions by a factor of nearly two to three times that of public institutions (Baum & Ma, 2010).

Reset policies respond directly to the high aid/high tuition policies many private institutions implement. By lowering the sticker price, families and students may be less intimidated by high college costs and more likely to apply and ultimately enroll in colleges and universities, in theory, leading to increased revenue. However, if net tuition remains steady, as it has over the past 15 years, we expect a decrease in published tuition price to necessitate changes in other net price determinants, namely financial aid expenditures. This study focuses on tuition resets and seeks to examine the policies’ utility as a viable enrollment and revenue management strategy among private bachelor’s-granting colleges. The following research questions guide our study:

-

1.

Does a tuition reset affect enrollment, and do these effects vary over time or by the size of the reset?

-

2.

Does a tuition reset affect institutional aid expenditures, and do these effects vary over time or by the size of the reset?

-

3.

Does a tuition reset affect students’ net price and institutions’ net tuition revenue, and do these effects vary over time or by reset size?

To answer these questions, we use a panel dataset of private nonprofit bachelor’s-granting colleges between 2009 and 2018 and employ a difference-in-differences strategy to estimate effects of these policies. We find that, on average, overall fall enrollment remained relatively stable once institutions adopted these policies. Financial aid expenditures, both total and per full-time-equivalent students, appeared to decrease, as expected, with an overall cut across published tuition price. We also find that net price decreased significantly, however, these decreases were accompanied by decreases in the estimates non-tuition aspects of total cost of attendance. Net tuition revenue per full-time equivalent enrolled student remains stable, suggesting that resets do not yield tuition cost savings for students. These results are robust to several threats to internal validity and design specifications. The results have several implications for institutional policy and practice, chiefly demonstrating that tuition discounts may not be a more effective or sustainable strategy as it relates to enrollment management for revenue maximization over time.

Literature Review

Student Price Response

Given the rising cost of college over the last three decades, much research has focused on student enrollment responses to changes in the listed tuition price, known as the Student Price Response Coefficient (Heller, 1997, 1999; Kim, 2010; Langelett et al., 2015; Leslie & Brinkman, 1988; Manski & Wise, 1983). Generally, research on enrollment responses to tuition levels suggests that for every $100 increase in tuition, the probability of enrolling decreases between one and two percentage points, on average, among all students after accounting for economic factors (Heller, 1997, 1999; Kim, 2010; Leslie & Brinkman, 1988). Heller (1999), however, finds that responses vary by significantly by race. For instance, White students are less responsive to price, whereas Black and Hispanic students, across two- and four-year colleges, are much more sensitive to price. Given shifting population dynamics, these differences in price response across demographic groups is important for informing the potential use of tuition resets as a recruitment tool.

Kim (2010) synthesized the literature focusing on the effect of prices on enrollment among private nonprofit institutions. Consistent with the research focused on public college enrollment, they also found negative enrollment responses with tuition increases among private nonprofits. Buss et al. (2004) also find a negative relationship between tuition prices and enrollment at selective liberal arts colleges. The authors provide evidence that both students who do and do not receive financial aid respond negatively to increases in tuition price. Collectively, these studies underscore the inverse relationships between net price and enrollment demands and the role of financial aid.

More recent research has shown negative relationships between price and enrollment in a variety of contexts including online education (Han et al., 2019), at public institutions (Marcotte & Delaney, 2021; Runco et al., 2019), among undocumented students (Conger & Turner, 2015), and among racial and ethnic minoritized students (Allen & Wolniak, 2019). Moreover, qualitative studies have underscored the financial pressures students face such that small increases in tuition and fees can derail students’ educational plans (Goldrick-Rab, 2016; Jones & Andrews, 2021). Taken together, evidence suggests that students respond to changes in prices, thus a decrease in sticker price may impact application and enrollment decisions.

Review of Tuition Discounting on Enrollment

Institutionally funded financial aid is just one type of financial aid for which students could be eligible, with private scholarships, state aid, and federal aid being the others. On average, research finds that any type of financial aid through the form of grants and scholarships have a positive effect on the probability of enrolling, persisting, and graduating from higher education (Deming & Dynarski, 2010; Nguyen et al., 2019). For example, in a meta-analysis of 43 experimental and quasi-experimental studies estimating the effect of grant aid on persistence and degree completion, Nguyen et al. (2019) found non-repayable grants and scholarships increased both persistence and completion between two and three percentage points. They also found that an additional $1000 in grant aid increased persistence by about two percentage points. This research suggests a positive effect of grant aid across federal, state, and institutional grants and scholarships over and above many of the factors (e.g., proximity, family obligations, etc.) that go into making these series of educational decisions unobserved by colleges. However, few studies have focused exclusively on the provision of institutionally funded financial aid, which is strictly money that the institution provides that are funded by gifts or general funds, and its impact on student enrollment and institutions (Behaunek & Gansemer-Topf, 2019; Hillman, 2012).

Much of the research examining institutionally funded aid has utilized a range of experimental and quasi-experimental techniques to estimate the effect of tuition discounting for students. For instance, Monks (2009) reported on the results of a field experiment where a small private college sent financial aid offers to half of their most highly rated and academically talented students that would otherwise get no financial aid and must pay the published tuition price. The impetus behind this experiment was due to recent budgetary constraints in the number of institutionally funded grants the college could offer. The award offer was a discount of around 17% of the sticker price. Monks found that the experiment was associated with about a three-percentage point increase in the probability of students enrolling.

van der Klaauw (2002) used a regression discontinuity design to estimate the effect of a college’s aid assignment on enrollment decisions from 1989 to 1993. In this analysis, the researcher also estimated the effect of this aid between two different groups: filers (i.e., students who filed and qualified for some type of federal financial aid) and non-filers (i.e., students who did not qualify for financial aid). Using an ability index score based on a combination of academic achievement indicators as the forcing variable, van der Klaauw found that a 10% increase in institutional aid would lead to about an 8.6% increase in the probability of enrolling among filers, those students with the greatest need. Nevertheless, the effect of tuition discounting on non-filers was also positive, albeit to a much smaller magnitude.

Birch and Rosenman (2019) also used a regression discontinuity design, leveraging an eligibility cut off of a combined value representing ACT and GPA, to examine whether an institutional merit aid program affected the likelihood of enrollment among already admitted students. On average, they found that students receiving the scholarship, which was for $2000 a year and renewable conditional on GPA and credit requirements, increased the likelihood of enrolling by 20%. In this setting, the researchers additionally modeled for students that are at the margin of enrolling, controlling for students who are “always certain to enroll” and students “not very likely to enroll.” Birch and Rosenman explain that students within this uncertain group and received the scholarship were around 30% more likely to enroll. The modeling decision of controlling for heterogeneity in the propensity to enroll provided nuance in understanding how tuition discounting can improve the chances of enrolling, particularly for undecided students. Overall, this literature suggests that students are generally sensitive to changes in tuition and specifically increases in institutional aid improves the chances of enrolling.

Tuition Reset Policies

The existing literature on tuition reset models is thin. Descriptive research offers unclear results regarding the association of these policies to enrollment. Lapovsky (2019) examined these policies at 24 institutions and found average enrollment among first year and transfer students increases two-years after the introduction of these policies at 60% of the institutions. In an analysis of 12 institutions, Armitage (2018) finds that enrollments increase during the year of the reset, but institutions do not necessarily sustain these enrollment gains. This suggests that there may be a temporal component to the effects. A significant limitation of these studies is that they used small samples and descriptive analyses, which are useful approaches to identify previously undocumented patterns and develop hypotheses but cannot provide causal estimates. What is missing from this growing body of literature is the use of quasi-experimental designs to establish plausibly causal relationships between these policies and the outcomes of interest.

Conceptual Framework

Following the work of Hillman (2012) and Behaunek and Gansemer-Topf (2019), we draw on microeconomic theory of private nonprofit college behavior as our conceptual framework (Breneman, 1994). This theory serves as the underlying framework informing tuition discounting, or institutionally funded financial aid. According to this theory, colleges and universities seek to maximize their utility (e.g., mission, reputation, revenue, etc.) by strategically distributing scarce resources (e.g., financial aid). Maximizing tuition revenue, by increasing enrollment demand, is of particular interest for private colleges given the contemporary economic pressures and circumstances leading them to be even more dependent upon tuition (Breneman et al., 2001; Cheslock, 2006; Hillman, 2012; Price & Sheftall, 2015).

Tuition reset policies specifically seek to increase demand by lowering the sticker price considerably. However, these enrollment changes hinge upon students recognizing the equivalence in net price between a $10,000 scholarship on $30,000 tuition and a flat tuition of $20,000. Discounting practices, especially in the form of prestige-named scholarships, appeal to the emotional side of students by signaling accomplishment and honor (Cheslock & Riggs, 2021). However, there is some risk associated with scholarships as they may depend on academic or athletic performance, participation in specific activities (e.g., orchestra), or fulfilling volunteering hours. From the perspective of a student, a tuition reset reduces that risk by discounting upfront. Prospect theory suggests that when students are aware of the gains of the tuition reset, via communication from the institution, they will prefer this less risky option which will increase demand (Kahneman & Tversky, 2013). From an institutional perspective, a high aid/high tuition model has been used to elicit emotional responses from students and to preserve a sense of quality that is frequently associated with price (Cheslock & Riggs, 2021, Duffy & Goldberg, 2014). However, given that public approval of higher education has decreased over time, much of which is associated with rising costs and the idea that colleges are “greedy” (Bennett, 1987; Marken, 2019), a tuition reset has been seen by institutional leaders as a public commitment to affordability that can work to counterbalance these negative views of postsecondary institutions (Lapovsky, 2019).

While enrollment is on one side of the net revenue coin, net price is on the other. In the case of tuition discounting, when institutional grants increase too much, aid expenditures can outpace sticker prices, thus decreasing net revenue (Behaunek & Gansemer-Topf, 2019; Langelett et al., 2015). Hillman (2012) found in his study of public four-year colleges engaging in institutionally funded financial aid that when tuition discount rates exceed 13%, institutions experience diminishing returns on their investment. A tuition reset moves away from a high aid/high tuition model to a lower aid/lower tuition model. It is unlikely a private institution will fully offsets aid expenditures with a decrease in sticker price. Instead, we hypothesize that a revenue focused institution will decrease aid in a way that maintains or increases net tuition revenue.

Data

We rely on institution-level data from the Integrated Postsecondary Education Data System (IPEDS) and College Scorecard from 2009 through 2018. We limit our sample to private nonprofit institutions offering a bachelor’s degree. These institutions are among the most expensive undergraduate institutions and the sector most likely to engage in tuition discounting practices (Baum & Ma, 2010). Because sticker prices and discounting practices differ at public institutions and for-profit colleges (Hillman, 2012), our examination of tuition resets focuses solely on private nonprofits. Using IPEDS data, we reduce our sample to all private nonprofit institutions that granted bachelor’s degrees from 2009 through 2018. We further reduced our sample to only include institutions for which all control variables, listed in Table 1, were available for all years of the study. This reduced our sample by 239 institutions. Additionally, we eliminated four additional institutions that reset tuition multiple times from the sample as they likely are unique cases. Table 1 includes descriptive statistics for dropped institutions, where data is available. We do not observe any important deviations from the overall sample, although it may be worth noting the average net price of dropped schools is roughly $6000 less than those in the sample and Hispanic students comprise 13% of students at dropped institutions and 8% at sample institutions.

We define and classify a tuition reset as a reduction of sticker price by five percent or more (Lapovsky, 2019). Because resets are a relatively new strategy and minimal empirical research exists on the effects of this approach, we test various other cut points for a reset in our modeling. Specifically, we use a 10%, 15%, and 20% as alternative definitions of a reset. By testing these alternative definitions, we expect to provide evidence that can support a continued conversation around tuition resets.

We identify 63 institutions in our sample with a 5% tuition reset from 2012 through 2018. We exclude institutions with a reset prior to 2012 in order to have sufficient pre-treatment data. Panel A of Fig. 1 shows the size of tuition resets in 2018 and Panel B shows the distribution of the number of resets across time. We see that resets vary and are relatively normally distributed between 5 and 53%, and the number of resets are distributed across our study period with peaks in 2014 and 2018. The distribution in the size of the resets will be important for examining the potential for heterogeneous effects across institutions, and also supports our testing of multiple definitions as larger resets may be different than smaller resets.

In this study, we seek to estimate how a tuition reset policy relates to several financial characteristics of an institution. Qualitative research suggests that institutions use resets as a marketing tool to recruit new students and a way to demystify tuition discounting practices and provide more transparency (Lapovsky, 2019). To estimate this relationship, we use six primary outcome variables: full-time equivalent (FTE) fall enrollment, total student aid expenditures, student aid expenditures per FTE, average net price, net tuition revenue per FTE, and non-tuition portions of the cost of attendance (COA). To normalize the data, we take the natural log of each outcome. We examine the effect of a reset on both the net price students pay as well as the non-tuition portion of total COA to understand how students are impacted and how colleges may adjust cost estimates as part of a reset strategy.

In accordance with previous research on tuition prices at private nonprofit college, we include enrollment percentages of IPEDS reported racial and ethnic groups, the proportion of undergraduates receiving a Pell grant, and the average acceptance rate as covariates in our models (Hillman, 2012). A small portion of institution-year observations were missing a reported acceptance rate; for these cases, we imputed an acceptance rate as the average of all other observed years for that institution. Table 1 shows descriptive statistics for the full sample and treatment and control institutions separately. The only substantial difference between the groups we observe is in the proportion of students receiving a Pell grant, which is 42% at reset institutions and 34% at non-reset institutions.

Methods

To estimate the relationship between tuition resets and our outcomes of interest, we employ a difference-in-differences (DiD) approach. DiD is used to estimate an effect of an exogenous change. We recognize that institutions actively opt into tuition reset policies and thus the policy shift is not truly exogenous. While we do not presuppose our DiD estimates to be causal, we do consider this the best approach to estimate the relationship between tuition reset policies and institutional outcomes.

Our primary estimation strategy is the two-way fixed-effects (TWFE) model:

where \(Y\) is our dependent variable representing our outcomes of interest; Reset is an indicator variable equal to one for treated units in the post-policy time period; \(\gamma\) and \(\delta\) represent institution and year fixed-effects, respectively, that account for unobservable and time-invariant characteristics; and \(\theta\) is a corresponding vector of coefficients of additional covariates to improve the internal validity of our model. We cluster our standard errors at the institution level to address serial correlation in outcomes and covariates.

We also test for heterogeneous effects over time and by the size of the reset. In Eq. 2 we interact the treatment indicator with a yearly counter of the length of time since the reset, like an event study design. Colleges can be slow to adapt to changes, and we expect it may take time to balance financial aid expenditures with the tuition reset. Similarly, student demand may vary in relationship to when the reset was implemented.

In Eq. 3, we interact the treatment dummy in the TWFE model with a continuous estimate of the percentage decrease in tuition linked with the reset policy in accordance with recent work by Callaway et al. (2021). This interaction provides an estimate with how the relationship between a tuition reset policy and the outcome of interest varies by the size of the reset. The literature and our conceptual framework suggest that larger cuts in sticker price are likely to induce larger increases in enrollment and larger decreases in financial aid expenditures.

The DiD model relies on an important assumption that the treatment and comparison groups are equal in expectations (also known as the parallel trends assumption). One way to test this assumption is to examine the covariate-adjusted pre-treatment trends in the outcomes. In Eq. 4, we interact a year dummy with a binary indicator, \({Treat}_{it}\), that is equal to 1 for treatment institutions and 0 for control institutions in all years. The coefficient on this provides an estimate if the pre-treatment trend in the outcome of interest varies between the groups, which would invalidate the assumption of the groups being equal in expectation. Because of the staggered implementation, we estimate these parallel trends separately for each cohort of treatment institution. We show these in Appendix Tables 6, 7, 8, 9, and conclude the time trends across the sample are similar enough to meet this assumption.

We also conduct multiple robustness checks on our models. First, we replace our outcomes of interest with outcomes expected to have no relationship with a tuition reset. The purpose of this is to examine the possibility of our main models estimating the effect of some unmeasured exogenous shift that confounds with the tuition reset policy. We use the amount of federal appropriations an institution receives as one alternative outcomes to test our models.

Additionally, previous research suggests the TWFE model may be biased due to the staggered implementation across our treatment institutions (Goodman-Bacon, 2018). The bias may occur because the staggered treatment enables schools to toggle between treatment and control groups. This can result in institutions that reset their tuition in the middle of the treatment period receiving a larger weight in the TWFE estimate of the average treatment effect. To address these concerns, we conduct robustness checks following the work of Callaway and Sant’Anna (2020), Dettmann et al. (2020), Cengiz et al. (2019), and Gardner (2021).

First, we use the csdid command in Stata (Rios-Avila, 2021) developed to implement Callaway and Sant’Anna’s estimation strategy for staggered treatments. This approach estimates the average treatment effect by comparing treated units with untreated units thus avoiding comparing treated units across the staggered adoption of tuition resets. Second, we use the flexpaneldid command in Stata (Dettmann et al., 2019) to estimate a reweighted average treatment effect. This approach uses matching to identify a single counterfactual for each institution based on the aforementioned covariates and estimates the treatment effect using this smaller sample. Third, we estimate the cohort-specific treatment effects for all schools resetting tuition in each year (Cengiz et al., 2019). This approach provides a series of point estimates that can be examined to determine the potential for an individual cohort to be biasing the average treatment effect identified in TWFE model. Fourth, we use the did2s command in Stata (Butts & Gardner, 2021) to employ the two-stage DiD approach to estimating the weighted group-time average treatment effect on the treated institutions.

Limitations

This study examines private nonprofit colleges and thus the findings are not generalizable to all postsecondary institutions. Additionally, the small sample size of institutions that reset tuition prices may represent a unique type of nonprofit college. Although our models mostly meet parallel trends assumptions, there may be other institutional characteristics that are impacting the effectiveness of tuition reset policies including institution-specific student demand, which may be driven by regional trends, reputation, or marketing materials, among other factors. For example, colleges may accompany a tuition reset with increased marketing activities in order to publicize the change.Footnote 1 We include an institution fixed effect to help account for these institution-specific characteristics, however, more precise measurement of these characteristics would improve our estimation approach. The small sample size may also impact our ability to detect effects with sufficient statistical power. Using Schochet’s (2021) approach for power calculations in a staggered DiD design, we estimate that our minimum detectable effect size with a power of 70% is 0.10.

Findings

Table 2 provides the primary TWFE estimates from Eq. 1, Table 3 provides the event study estimates from Eq. 2, and Table 4 provides the estimates of heterogeneous effects by reset size presented in Eq. 3. Our robustness checks, discussed below, are included in the appendix in Tables 13, 14, and 15. In Table 5, we present findings from alternate definitions of tuition resets as an additional robustness check and an opportunity to better specify the definition of a tuition reset.

Main Estimates

Our first research question asks whether a tuition reset policy is associated with changes in enrollment, and whether these effects vary over time or by size of the reset. While we do not find evidence of a significant average treatment effect across all treatment years, as shown in Table 2, Table 3 provides evidence there may be a short-term increase in enrollment. Point estimates suggest a 7–13% increase in FTE undergraduate enrollment in years two, three, and four following the reset. However, the point estimate for year one of the reset is 0.01 and statistically insignificant. We discuss this pattern in more detail below in our analysis of the staggered timing effects. The estimate in Table 4 suggests that reset size is unrelated to enrollment changes following the change.

Our second research question seeks to understand the relationship between tuition resets and financial aid expenditures. We examine both the change in total financial aid and the per FTE change. We find an average decrease in total institutional aid dollars of 19%, and a decrease of 23% for per FTE aid following the reset. The decrease in both total aid and aid per FTE appears to remain relatively stable with some change in magnitude over time (i.e., larger decreases in aid), as seen in Table 3. Larger tuition resets are also associated with larger cuts to total and per FTE aid expenditures. Because the size of the reset is measured as a percentage from 0 to 1, the coefficients in Table 4 should be interpreted such that a 10% cut in tuition is associated with a 7.7% reduction in total student aid and a 9.9% reduction in per FTE aid. This interpretation assumes a linear relationship between the size of the reset and changes in student aid expenditures.Footnote 2 Under this assumption, there appears to be nearly a one-to-one trade off in the percentage changes of tuition resets and per FTE aid expenditure, whereas total aid budgets appear to decrease at a slower rate as the size of the reset increases.

Our third research question concerns the combination of expected changes in enrollment with expected changes in student aid expenditures following a tuition reset. We seek to estimate how the average net price for undergraduates and net tuition revenue for institutions relate to tuition reset policies. We find evidence that the average net price decreases by roughly eight percent following a tuition reset. This effect seems to grow over time with point estimates in later years suggesting net price decreases by 12%. We also find that larger tuition resets result in larger reductions in net price. Using the approximate tails of the distribution of reset sizes in our study, a five percent reset is associated with a 1.4% decrease in net price and a 50% cut in sticker price is associated with 14% decrease in net price, assuming a linear relationship between effect and reset sizes. However, decreases in net prices for students are also accompanied by decreases in the non-tuition portion of a student’s total cost of attendance suggesting that the “savings” students experience may be attributable to the college estimating lower cost of living expenses (e.g., housing, food, transportation, etc.) rather than a decrease in the net tuition price students pay. The magnitudes of these decreases in non-tuition COA expenses closely resemble those of the net price across Tables 2, 3, 4.

We also examined the effect of a tuition reset on net tuition revenue for institutions. The point estimate in Table 2 indicates a 5% decrease in net tuition revenue per FTE, however it is accompanied by a large standard error and is statistically insignificant. Moreover, the event study estimates show significant variability in estimates over time and few are statistically significant; there also does not appear to be a relationship between net tuition revenue and the size of the reset, as seen in Table 4. Not only does this suggest that tuition resets are not an effective way to increase per FTE net tuition revenue, it provides additional support for the idea that students’ reduced net price is largely driven by non-tuition factors.

Falsification and Robustness Checks

As a falsification check, we estimate these models using an alternative outcome that is not expected to be related to a college implementing a tuition reset: total federal appropriations. We find no evidence of effects on unrelated outcomes, which provides additional support that the effects on our primary outcomes of interest are related to the tuition reset policy. We provide results of this falsification test in Table 12 in the appendix.

Table 13, in the appendix, provides the Callaway and Sant’Anna estimates of the average treatment effects. These estimates closely match the TWFE estimates provided in Table 2 which suggests, in this specific case, the differential timing has minimal effects on the TWFE estimator. We also address the potential bias that exists due to the staggered implementation by providing estimates of the average treatment effect in accordance with the estimation strategy put forward by Dettmann et al. (2019). We present these estimates in Table 14, in the appendix. Because this approach uses matched pairs, the sample size is small and thus the standard errors are large. The estimates from this approach, however, generally confirm the size and direction of our estimated effects. The coefficients suggest a modest increase in enrollment and large decreases in student aid. While the DiD estimate for enrollment is similar to the TWFE model, the mean differences in treatment and matched control institutions suggest the effect is possibly driven by both an increase in enrollment at reset schools and a decrease at non-reset schools. Because these are matched pairs, this finding points to the possibility of students sorting across similar schools based on reset policies. The modest decrease in net price is accompanied by a small decrease in non-tuition COA. The effect on net tuition revenue is near zero, which is accompanied by virtually no change in tuition revenue from treatment or matched control institutions. These similarities between the TWFE, Callaway and Sant’Anna, and flexible conditional ATE coefficients are important for the validity of our estimates of the heterogeneous treatment effects presented in Table 4 as the approach we take in Eq. 3 does not account for the staggered timing and solely isolates the heterogeneity associated with the continuous measure of reset size.

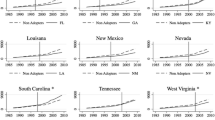

To further examine potential sources of bias in the TWFE estimates, we estimate cohort-specific effects following the approach of Cengiz et al. (2019). Because our treated sample only includes 63 institutions, each yearly cohort of treated institutions is small and thus the standard errors for the cohort-specific estimates are large. However, the point estimates can help identify patterns across cohorts and potential outliers that bias the TWFE estimates. Figure 2, in the appendix, provides the cohort-specific estimates for each of the four outcomes of interest.

The mix of positive and negative estimated effects aligns with the lack of specificity in our main estimate of the relationship between tuition resets and enrollment. The cohort-specific estimates show some evidence that late adopters may see decreases in enrollment despite the fact that our time-trend estimates in Table 3 suggest positive enrollment effects in the early years following adoption (late adopters would only have a small number of treatment years and thus would only be in the early year event study estimates). It is important to also consider that while the parallel trends assumption is generally met, it does not hold well for the 2017 cohort of adopters, which puts into question the validity of that specific estimate. This is important given that the preceding years are generally positive, albeit statistically insignificant. These points, coupled with the large standard error on the TWFE estimate of the enrollment change, lead us to be confident in our main finding that there does not appear to be a strong long-term enrollment effect from the tuition reset, but there may be short-term increases.

Decreases in total aid appear to be consistent across cohorts, but per FTE aid decreases are concentrated among the earlier adopters. Decreases in the average net price are relatively consistent, although the earlier adopters may decrease their net price more than the later adopters. The 2013 cohort shows evidence that it fails the parallel trends assumption and also has the most extreme cohort-specific estimated effect. If we exclude this specific estimate, we still identify a negative relationship, but one that more closely mirrors the magnitude of effects on the non-tuition COA, thus supporting the idea that colleges may decrease net price through non-tuition levers. Additionally, the clustering among earlier cohorts may help explain the larger effect we find in our event study in the later years, which would consist solely of the initial institutions that reset tuition.

The parallel trends for the per FTE net tuition revenue show some weaknesses for the 2013 and 2017 cohort. The cohort-specific estimates for these two groups are also roughly 0, compared to mostly negative estimates for other groups. While these two cohorts may play a role in upwardly biasing the TWFE estimate, or moderating the negative effect, we are still confident in our main finding that resets do not appear to be related to net tuition revenue given how large the standard errors are in our various estimation strategies.

In Table 15, in the appendix, we estimate the two-stage DiD ATE which aligns closely with our other estimates thus providing additional evidence of our primary set of findings. Gardner’s (2021) approach uses a weighting scheme that results in the years soon after a policy change influencing the estimated ATE more than years further in the future (Cunningham, 2021). Given that tuition resets can be erased over time by tuition increases, especially smaller resets, and that the reset is usually accompanied by some marketing and publicity efforts that may have a stronger impact in the near term, we believe the two-stage DiD is a strong confirmation of our TWFE models. Despite the higher weight associated with short-term outcomes, the ATE for enrollment is still statistically insignificant.

Finally, we examine alternative definitions of a tuition reset. While Eq. 3, and the findings presented in Table 4, show a negative relationship between reset size and financial aid expenditure, net price, and non-tuition COA, using differing cut points for the reset definition allows us to compare the magnitude of estimates associated with larger resets. The findings in Table 5 suggest stronger effects on financial aid expenditures, with larger decreases in aid occurring at institutions that cut tuition by larger amounts. The net price and non-tuition COA are more stable across definitions, but the magnitude grows marginally with larger resets. These alternative definitions also help account for potential decreases in tuition that were not intentional or well publicized resets or were the result of an institution’s change in IPEDS reporting process (e.g., from flat tuition to per credit hour tuition rate).

To summarize, the falsification and robustness checks largely confirm our primary estimates that tuition resets appear to have minimal effects on enrollment in the long-run, to result in decreases in total and per FTE institutional aid, to be associated with lower average net prices and non-tuition COA estimates, and to have little impact on net tuition revenue per FTE. The main estimates do suggest that there may be a positive effect on enrollment in the first few years following the reset, but that the effect dissipates over time. We also show evidence that spending on institutional aid, the net price, and non-tuition COA decrease as the tuition reset increases.

Discussion

Enrollment

We do not find strong evidence that tuition reset policies are closely related to increases in long-term enrollment, although there is some evidence of a near-term growth. These effects are important considering the declining number of “traditional-aged” students (Grawe, 2018), who are the typical student at private nonprofit colleges, and the declining faith and interest in higher education (Marken, 2019). Tuition-dependent colleges will need to maintain enrollments for their continued operations, thus better understanding how students respond to resets is important. A range of factors related to the college-going decision may be driving these findings. First, price may not be the most determinative factor for students, or resets may not be significant enough to counteract sticker shock (Levine et al., 2022). That is, despite the reduction in sticker price, students may still opt to enroll elsewhere for non-financial reasons. While prospect theory suggests that the security associated with a tuition reset or a guaranteed discount (Kahneman & Tversky, 2013; Tversky & Kahneman, 1985)—as opposed to a discount through institutional aid that may be contingent on continued performance—would be an important incentive for students to enroll, our findings do not provide evidence that students are responding this way, except potentially in the short run. Although one explanation is that resets are too small, even using a more restrictive cutoff of 20% as the definition of a reset does not produce evidence that enrollments respond. However, if the reset is not maintained over time, the security associated with the lower tuition likely fades over time which may be contributing to the short-lived effects. Other scholars note the emotional and psychological draw that discounting through prestige-granting scholarships has for students (Birch and Rosenman, 2019; Cheslock & Riggs, 2021; Monks, 2009; van der Klaauw, 2002), which may subvert the potential enrollment effects of a tuition reset, especially if the decreased institutional aid came from cuts to these types of scholarships. A direct comparison of these theories within the context of tuition resets would provide important insights into student decision-making.

Second, students may perceive the reset as an indication of poor quality. Private institutions, which hold the highest sticker prices, may be a Veblen good; that is, a higher price may indicate a higher value to consumers (Veblen, 2005). In the past, institutions have used this Chivas Regal effect to bolster status. For example, when institutions fall in national rankings, they respond by increasing tuition prices in an attempt to increase perceived quality (Askin & Bothner, 2016). However, we posited that colleges may be lowering sticker prices to counter public narratives and outcry about the high price of college and to publicly show a commitment to affordability in an effort to become a more attractive option for students. Additional research into the effects of sustained resets would help understand these enrollment effects. Analyses using student-level qualitative and quantitative data should be considered to better understand these dynamics.

Third, despite the reset, parents and students may continue to make errors in predicting cost and ultimately overestimate how expensive the institution will be. These errors may be minimized during the initial decrease in tuition, but erased as recency of the reset fades. This factor is particularly salient for low-income and racially minoritized students (Grodsky & Jones, 2007), and suggests an avenue for further consideration.

Fourth, early effects on enrollment suggest a tuition reset may be an effective marketing tool in the short term. However, as sticker prices increase following the reset, that marketing ploy is unlikely to help. It is worth considering that long-term effects may not even be the goal or expectation given the general upward trajectory of tuition in the long run. Like other marketing tools, administrators may only expect a short-term effect. Additionally, the flexible conditional ATE presented in Table 14, while statistically insignificant, suggest that any enrollment effect may be the product of institutions with a reset experiencing small gains in enrollment while matched institutions see declines in enrollment. It is possible that near-term enrollment gains after a reset reflect a siphoning of students from similar institutions. Moreover, the short-term enrollment effects we see suggest that a tuition reset policy is not likely to be a viable solution to any long-term downward trends in student demand. Instead, the reset may only be a temporary fix to flagging enrollments. These findings strengthen the call for continued research into students’ college-going decisions.

Institutional Aid

At private nonprofit colleges, discounting the sticker price is a longstanding and increasingly common practice (Ma et al., 2020). It is not surprising that sticker prices and discount amounts have increased jointly. For private institutions, the average net price students pay dictates the total tuition revenue and thus a significant portion of most private nonprofit colleges’ budget (Archibald & Feldman, 2014). As we describe above, a tuition reset is logically accompanied by a decrease in institutional aid. Our findings support this. Both the total amount of aid and per FTE aid decrease following a reset. Moreover, the decreases in aid vary directly with reset size. That is, when a school cuts tuition by a greater percentage, it decreases institutional aid by a larger percentage compared to smaller tuition resets. This finding confirms the importance of net tuition revenue for colleges and that institutions use dynamic pricing that combines sticker price with aid awards.

Behaunek and Gansemer-Topf (2019) examined the relationship between tuition discounting and enrollment outcomes—student applications, enrollment numbers, admission rate, and enrollment by race and income—at small private baccalaureate colleges. Using a ten-year panel data set of private colleges, they found the number of institutions providing more than 95% of their incoming first-year students financial aid has increased from 2003 to 2012. During this time, there have been steady increases in the number of racial/ethnic minoritized students and Pell recipients enrolling in these colleges, while admission rates are down. From an equity perspective, it is important that future research examine the relationship between tuition reset policies and changes in the composition of student enrollments, and how these changes compare to other discounting strategies. Reset policies have the potential to be a tool to lower the perceived cost barrier for historically underserved students and thus increase access.

Net Price and Tuition Revenue

The combination of the decrease in sticker price and the decrease in institutional aid associated with a tuition reset policy combine to have an effect on the average net price students pay. Our findings show a negative impact on net price. Although colleges that reset tuition also decreased financial aid, this decrease does not appear to be enough to fully offset the drop in sticker price. This means colleges that reset tuition are, on average, less expensive than they previously were. However, the decrease in net price comes with a decrease in non-tuition COA. The price students pay include these non-tuition elements such as books, supplies, room, board, and transportation. Following a reset, institutions lower the expected cost of these expenses by five to eight percent. A tuition reset, however, is only a reduction of the published tuition price, not a change in the costs of transportation or books. We know that colleges typically accompany their reset with substantial marketing and publicity efforts (Lapovsky, 2019), and our findings suggest they may employ strategies that make the overall cost of attendance appear lower by reducing the estimated cost of non-tuition expenses. While this may be an additional marketing or recruitment tool, it may also reflect an effort to recalibrate estimated costs alongside the tuition reset. Future research should examine the accuracy of these changes to non-tuition COA to better discern between an earnest recalibration and marketing tool.

Net Tuition Revenue

This decrease in net price runs counter to larger trends among private nonprofit colleges. Despite increasing levels of discounting, colleges have maintained a relatively flat net price (Ma et al., 2020). Our findings suggest that per FTE net tuition revenue remains stable after a reset. While point estimates are negative, they are statistically insignificant across our models and robustness checks. Although some colleges may use a reset for marketing purposes or as a public commitment to affordability, they adjust aid expenditures in a way that leaves per FTE net tuition revenue flat. In other words, students are still giving institutions the same amount of tuition before and after a reset.

Private nonprofit colleges have largely embraced the high aid/high tuition model, but a tuition reset may indicate a shift to a low aid/low tuition model where sticker price is closer to the actual net price paid. A tuition reset essentially redistributes institutional discounting evenly across students, rather than having enrollment managers award dollars to individual students on an ad hoc basis. Because merit-aid, which comprises a growing share of institutional discounts, is awarded disproportionately to wealthy and white students, a tuition reset may create a more egalitarian set of net prices across groups of students. It is important for future research to examine the potential equity impacts of such a shift away from the high aid/high tuition model, even if the average net tuition revenue remains flat.

Conclusion

In this study we examine the relationship between tuition reset policies and enrollment, institutional aid expenditures, and net tuition prices. Our findings suggest that colleges behave in a revenue maximizing manner and reduce institutional aid as they cut sticker prices. We provide evidence that institutions with the largest tuition resets also cut aid by the largest amounts. Although colleges may be revenue maximizing in their strategies, tuition resets do not appear to have significant or sustained effects on enrollment and are unlikely to help counter flagging demand. Counter to expectations, net price decreases following a reset. However, this decrease is accompanied by a decrease in non-tuition COA which suggests colleges may reduce the expected costs of books, transportation, and other non-tuition expenses as an earnest recalibration of costs during the reset process or as an additional way to market lower prices to students. Importantly, net tuition revenue per FTE does not appear to decrease after a tuition reset, suggesting students are still facing similar tuition bills. We believe it is important for future research to discern between these approaches to better understand reset policies.

This study is among the first to explore the effects of a tuition reset policy. Although we find minimal overall effects on enrollment, additional research on the promise of tuition reset policies to increase access for underserved groups of students is important. Additionally, we suggest future work examine student decision making in the face of a reset. While we used a five percent cutoff as our definition of a tuition reset to mirror previous research (Lapovsky, 2019), we explored how the effects vary with the size of the reset and what the effects were using alternative cutoffs. The effects were consistent in their significance across these tests and the magnitude appeared to grow as the reset grew. The variability in reset sizes and effects leads us to conclude that more attention should be paid to defining resets. Scholars examining this topic should also consider differentiating resets by other accompanying factors, such as change non-tuition COA simultaneously. Bringing additional nuance to the definition of a tuition reset can help future work more clearly identify effects and the contexts in which they occur.

Notes

To address this we incorporated advertising expenditures which colleges are required to report on their annual 990 forms they submit to the Internal Revenue Service. We use the 990 data collected and compiled by the Urban Institute (2021). The data are only available from 2011 through 2016. As such, we conducted an additional set of analysis following Callaway and Sant’Anna’s approach to staggered treatment timing using a more limited set of years and treated institutions. Using this limited sample we estimated models with and without the inclusion of advertising dollars and found that this variable had no significant impact on the estimated treatment effects. Given the limited sample included in these analyses, we do not include these findings with the main estimates shown in our findings section. However, these findings assuage concerns that advertising dollars may significantly alter our main estimates. These estimates are available upon request from the corresponding author.

Scatter plots comparing reset size to the year-by-year changes of each outcome do not suggest any non-linear relationships. As such, we are comfortable using the linear interpretation to estimate heterogeneous effects.

References

Allen, D., & Wolniak, G. C. (2019). Exploring the effects of tuition increases on racial/ethnic diversity at public colleges and universities. Research in Higher Education, 60(1), 18–43.

Archibald, R. B., & Feldman, D. H. (2014). Why does college cost so much?. Oxford University Press.

Armitage, A. S. (2018). Outcomes of tuition resets at small, private, not-for-profit institutions [Doctoral dissertation, University of Pennsylvania]. Scholarly Commons.

Askin, N., & Bothner, M. S. (2016). Status-aspirational pricing: The “Chivas Regal” strategy in US higher education, 2006–2012. Administrative Science Quarterly, 61(2), 217–253.

Baum, S., & Ma, J. (2010). Tuition discounting: Institutional aid patterns at public and private colleges and universities. Trends in Higher Education Series. College Board.

Behaunek, L., & Gansemer-Topf, A. M. (2019). Tuition discounting at small, private, baccalaureate institutions: Reaching a point of no return?Journal of Student Financial Aid, 48(3).

Bennett, W. J. (1987). Our greedy colleges. New York Times, 18, A27.

Birch, M., & Rosenman, R. (2019). How much does merit aid actually matter? Revisiting merit aid and college enrollment when some students “come anyway”. Research in Higher Education, 60(6), 760–802.

Breneman, D. W. (1994). Liberal arts colleges: Thriving, surviving, or endangered?. Brookings Institution Press.

Breneman, D. W., Doti, J. L., & Lapovsky, L. (2001). Financing private colleges and universities: The role of tuition discounting. In M. B. Paulsen, & J. C. Smart (Eds.), The finance of higher education: Theory, research, policy, and practice (pp. 461–479). Agathon Press.

Buss, C., Parker, J., & Rivenburg, J. (2004). Cost, quality and enrollment demand at liberal arts colleges. Economics of Education Review, 23(1), 57–65.

Butts, K., & Gardner, J. (2021). did2s: Two-stage difference-in-differences. arXiv preprint arXiv:2109.05913.

Callaway, B., Goodman-Bacon, A., & Sant’Anna, P. H. (2021). Difference-in-Differences with a Continuous Treatment. arXiv preprint arXiv:2107.02637.

Callaway, B., & Sant’Anna, P. H. (2020). Difference-in-differences with multiple time periods.Journal of Econometrics.

Cengiz, D., Dube, A., Lindner, A., & Zipperer, B. (2019). The effect of minimum wages on low-wage jobs. The Quarterly Journal of Economics, 134(3), 1405–1454.

Cheslock, J. J. (2006). Applying economics to institutional research on higher education revenues. New Directions for Institutional Research, 132, 25–41.

Cheslock, J. J., & Riggs, S. O. (2021). Psychology, market pressures, and pricing decisions in higher education: the case of the US private sector. Higher Education, 81(4), 757–774.

Conger, D., & Turner, L. J. (2015). The impact of tuition increases on undocumented college students’ attainment (No. w21135). National Bureau of Economic Research.

Cunningham, S. (2021). Two stage did and taming the did revolution. Causal Inference: The Remix Substack. Retrieved from: https://causalinf.substack.com/p/two-stage-did-and-taming-the-did.

Deming, D., & Dynarski, S. (2010). College aid. In P. B. Levine, & D. J. Zimmerman (Eds.), Targeting investments in children: Fighting poverty when resources are limited (pp. 283–302). University of Chicago Press.

Dettmann, E., Giebler, A., & Weyh, A. (2019). flexpaneldid: A Stata command for causal analysis with varying treatment time and duration (No. 5/2019). IWH Discussion Papers.

Duffy, E. A., & Goldberg, I. (2014). Crafting a class: College admissions and financial aid, 1955–1994 (77 vol.). Princeton University Press.

Gardner, J. (2021). Two-stage differences in differences. Unpublished working paper.

Goodman-Bacon, A. (2018). Difference-in-differences with variation in treatment timing (No. w25018). National Bureau of Economic Research.

Goldrick-Rab, S. (2016). Paying the price: College costs, financial aid, and the betrayal of the American dream. University of Chicago Press.

Grawe, N. D. (2018). Demographics and the demand for higher education. JHU Press.

Grodsky, E., & Jones, M. T. (2007). Real and imagined barriers to college entry: Perceptions of cost. Social Science Research, 36(2), 745–766.

Han, Y., Ryan, M. P., & Manley, K. (2019). Online course enrolment and tuition: empirical evidence from public colleges in Georgia, USA. International Journal of Education Economics and Development, 10(1), 1–21.

Hearn, J. C., Griswold, C. P., & Marine, G. M. (1996). Region, resources, and reason: A contextual analysis of state tuition and student aid policies. Research in Higher Education, 37(3), 141–178.

Heller, D. E. (1997). Student price response in higher education: An update to Leslie and Brinkman. The Journal of Higher Education, 68(6), 624–659.

Heller, D. E. (1999). The effects of tuition and state financial aid on public college enrollment. The Review of Higher Education, 23(1), 65–89.

Hillman, N. W. (2012). Tuition discounting for revenue management. Research in Higher Education, 53(3), 263–281.

Hussar, B., Zhang, J., Hein, S., Wang, K., Roberts, A., Cui, J., Smith, M., Bullock Mann, F., Barmer, A., & Dilig, R. (2020). The Condition of Education 2020 (NCES 2020 – 144). U.S. Department of Education, National Center for Education Statistics.

Jones, S. M., & Andrews, M. (2021). Stranded credits: a matter of equity. Ithaka S + R.

Kahneman, D., & Tversky, A. (2013). Prospect theory: An analysis of decision under risk. In Handbook of the fundamentals of financial decision making: Part I (pp. 99–127).

Kim, M. (2010). Early decision and financial aid competition among need-blind colleges and universities. Journal of Public Economics, 94(5–6), 410–420.

Krupnick, M. (2018). Bending to the law of supply and demand, some colleges are dropping their prices. Hechinger Report.

Langelett, G., Chang, K., Akinfenwa, S. O., Jorgensen, N., & Bhattarai, K. (2015). Elasticity of demand for tuition fees at an institution of higher education. Journal of Higher Education Policy and Management, 37(1), 111–119.

Lapovsky, L. (2019). Do price resets work? https://lapovsky.com/wp-content/uploads/2010/07/Do-Price-Resets-Work-.pdf

Leslie, L. L., & Brinkman, P. T. (1988). The economic value of higher education. American Council on Education.

Levine, P. B., Ma, J., & Russell, L. C. (2022). Do college applicants respond to changes in sticker prices even when they don't matter? Education Finance and Policy. https://doi.org/10.1162/edfp_a_00372

Ma, J., Pender, M., & Libassi, C. J. (2020). Trends in college pricing 2019. College Board.

Manski, C., & Wise, D. (1983). College choice in America. Harvard University Press.

Marcotte, D. E., & Delaney, T. Public higher education costs and college enrollment. EdWorkingPaper No. 21–464.

Marken, S. (2019). Half in U.S. now consider college education very important. Gallup.

Monks, J. (2009). The impact of merit-based financial aid on college enrollment: A field experiment. Economics of Education Review, 28(1), 99–106.

Nguyen, T. D., Kramer, J. W., & Evans, B. J. (2019). The effects of grant aid on student persistence and degree attainment: A systematic review and meta-analysis of the causal evidence. Review of Educational Research, 89(6), 831–874.

Price, G., & Sheftall, W. (2015). The price elasticity of freshman enrollment demand at a historically black college for males: Implications for the design of tuition and financial aid pricing schemes that maximize Black male college access. The Journal of Negro Education, 84(4), 578–592.

Rios-Avila, F. (2021). CSDID Version 1.6. Github.

Runco, M., Francisco, J., & Bates, J. (2019). The impact of tuition increases on enrollment in the state of alabama public colleges and universities. Economics Bulletin, 39(4), 2697–2705.

Schochet, P. Z. (2021). Statistical Power for Estimating Treatment Effects Using Difference-in-Differences and Comparative Interrupted Time Series Estimators With Variation in Treatment Timing.Journal of Educational and Behavioral Statistics,10769986211070625.

Seltzer, R. (2020). April 27). Pricing pressures escalate. Inside Higher Ed.

Smith, D. O. (2019). University finances: Accounting and budgeting principles for higher education. Johns Hopkins University Press.

Turner, S. (2018). The evaluation of the high tuition, high aid debate. Change: The magazine of higher learning, 50(3–4), 142–148.

Tversky, A., & Kahneman, D. (1985). The framing of decisions and the psychology of choice. In Behavioral decision making (pp. 25–41). Boston, MA: Springer.

van der Klaauw, W. (2002). Estimating the effect of financial aid offers on college enrollment: A regression–discontinuity approach. International Economic Review, 43(4), 1249–1287.

Veblen, T. (2005). The theory of the leisure class: An economic study of institutions. Aakar Books.

National Student Clearinghouse (2022). Current term enrollment estimates.National Student Clearinghouse. https://nscresearchcenter.org/current-term-enrollment-estimates/.

Funding

Funding was provided by National Science Foundation (Grant No.: 1749275).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

See Tables 6, 7, 8, 9, 10, 11, 12, 13, 14, 15 and Fig. 2.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ward, J.D., Corral, D. Resetting Prices: Estimating the Effect of Tuition Reset Policies on Institutional Finances and Enrollment. Res High Educ 64, 862–892 (2023). https://doi.org/10.1007/s11162-022-09723-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11162-022-09723-6